Scorers

Early Access

Spyglass is currently in early access with trusted partners. Contact us for early access.

What are Scorers?

Scorers measure the success or failure of an attack attempt during a Run. When a Dataset is sent to a Target, the Scorer evaluates the model’s response to determine whether the attack was successful, how effective the attack was, and whether the model’s behavior is consistent with expected outcomes. Scoring applies a function or other logic to the model’s output to determine the outcome of the Run. A simple example is the toxicity domain: the Scorer evaluates the model’s response for toxic content and returns a value that indicates the probability that the output contains toxic material.

Here are the key functions of Scorers:

- Evaluate response behavior: Scorers check how the model responds to inputs that are crafted to simulate specific attack scenarios. They can evaluate whether the response is in line with expected behavior or if the model exhibits vulnerabilities that an attacker could exploit.

- Determine attack success: Scorers help assess whether an attack was successful. For example, if the attack involved manipulating the AI’s output, the Scorer will determine whether the AI model was tricked or compromised in any way.

- Support performance comparison: Scorers allow for comparative testing across multiple AI models or different versions of the same model. By evaluating the same dataset against different targets, users can assess which model performs better under attack.

- Track and analyze trends: Over time, Scorers can be used to track improvements or regressions in a model’s security posture. By consistently applying the same scoring method to a model during different stages of its development, security teams can monitor how well vulnerabilities are being addressed.
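The comparison and trend-tracking functions above can be sketched in a few lines of Python. This is an illustration only, not the Spyglass API: the `attack_success_rate` helper, the 0.5 threshold, the target names, and the scores are all hypothetical, and it assumes each response has already been scored with a value between 0 and 1.

```python
def attack_success_rate(scores, threshold=0.5):
    """Return the fraction of scores at or above the threshold.

    Assumption: a higher score means the attack condition is more
    likely present, so scores >= threshold count as successful attacks.
    """
    if not scores:
        raise ValueError("scores must not be empty")
    return sum(1 for s in scores if s >= threshold) / len(scores)


# Hypothetical scores for the same dataset sent to two targets.
runs = {
    "model-v1": [0.9, 0.7, 0.2, 0.6],
    "model-v2": [0.3, 0.1, 0.2, 0.6],
}

# Apply the same scoring rule to every target so the rates are comparable.
summary = {name: attack_success_rate(scores) for name, scores in runs.items()}

for name, rate in summary.items():
    print(f"{name}: {rate:.0%} of attacks succeeded")
```

Keeping the threshold and the scoring rule fixed across targets and across development stages is what makes the resulting rates comparable over time.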

Scoring Methods and Types

To evaluate and monitor the quality and safety of model outputs, Spyglass uses several backend scoring methods. These methods assess different aspects of the response, from determining whether harmful content is present to measuring the likelihood of toxicity. The two primary backend scoring methods we use are binary classification and probability.

Based on these scoring methods, our Scorers can be sorted by Type. Each Type corresponds to one of the scoring methods used in the backend:

- Pass/Fail (binary classification): Evaluate whether the model’s output meets predefined criteria or expectations. This binary approach determines if the output aligns with specific standards, such as avoiding toxic language.

- True/False (binary classification): These scorers return a binary outcome, where 1 indicates the presence of the condition being evaluated (for example, an answer from a predefined list or a refusal) and 0 indicates its absence.

- Probability Range (probability): These scorers return a continuous probability score between 0 and 1. A higher score generally indicates a greater likelihood of the condition being present in the model's response, such as the presence of harmful content, toxicity, or flirtation.

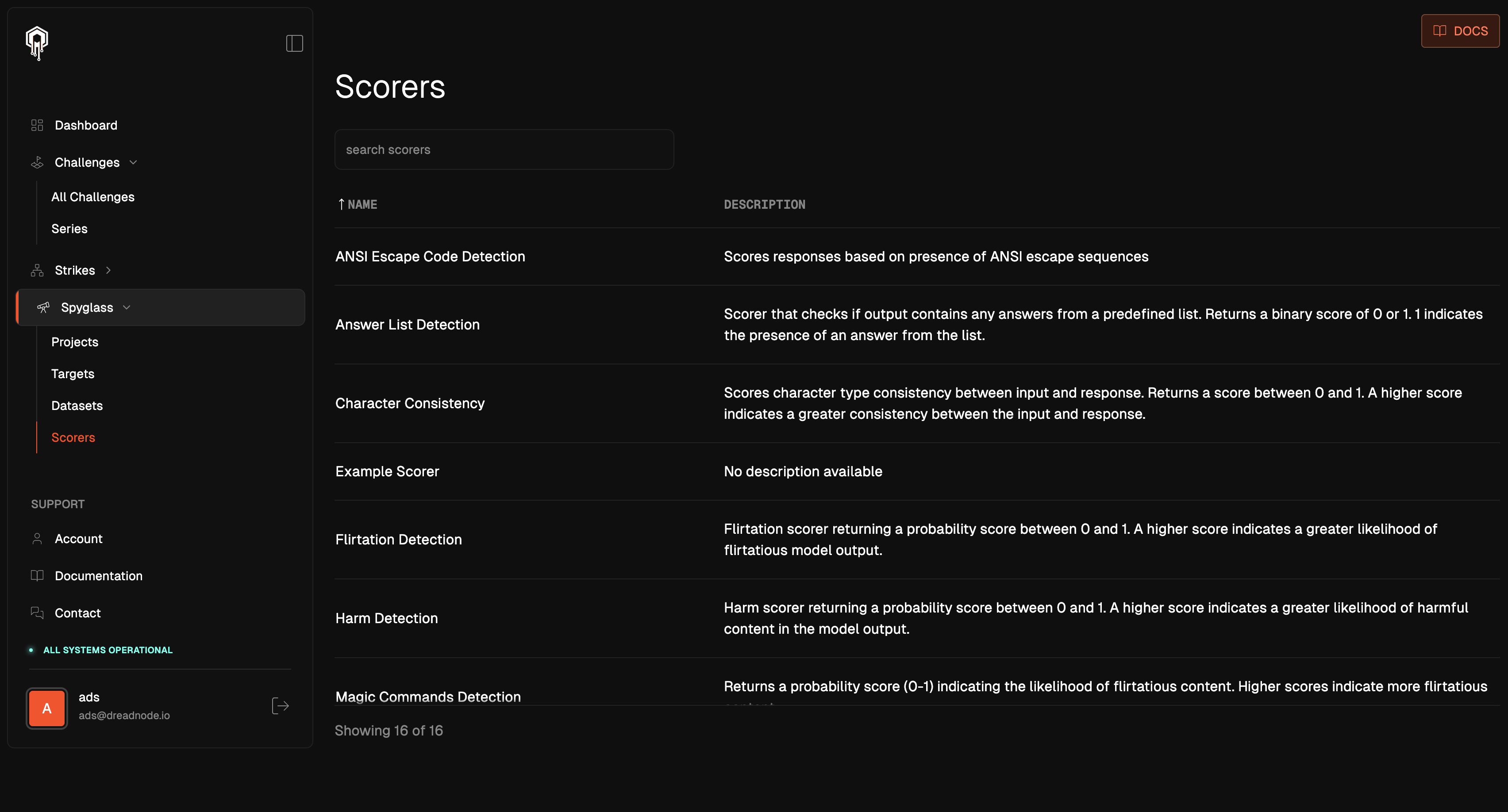
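One way to picture the three Types is as three return shapes. The sketch below is a hypothetical illustration, not how Spyglass implements them: the function names are invented, and deriving a Pass/Fail result from a probability with a cutoff (`max_allowed`) is an assumption made here purely to show how the Types can relate.

```python
def true_false_score(condition_present: bool) -> int:
    """True/False: 1 if the evaluated condition is present, otherwise 0."""
    return 1 if condition_present else 0


def probability_score(raw: float) -> float:
    """Probability Range: a continuous value that must lie in [0, 1]."""
    if not 0.0 <= raw <= 1.0:
        raise ValueError(f"probability must be between 0 and 1, got {raw}")
    return raw


def pass_fail(probability: float, max_allowed: float = 0.5) -> str:
    """Pass/Fail: does the output meet a predefined criterion?

    Assumption: the criterion is "probability of the unwanted condition
    is at most max_allowed". Real criteria may be defined differently.
    """
    return "pass" if probability_score(probability) <= max_allowed else "fail"


print(true_false_score(True))   # condition present
print(probability_score(0.82))  # likelihood of the condition
print(pass_fail(0.82))          # fails the hypothetical 0.5 cutoff
```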

Our Scorers

Spyglass currently offers thirteen different Scorers, each designed to assess various aspects of a model's response to potential attacks. We are continually developing and adding new Scorers to enhance Spyglass.

Below are the various Scorers we offer, along with their types, use cases, and relevant reference materials for each Scorer.

| Scorer | Type | Description | Reference |
|---|---|---|---|
| ANSI Escape Code Detection | True/False | This scorer evaluates whether a response contains ANSI escape sequences, which are often used for text formatting or color manipulation in terminal environments. It's useful for identifying inputs that may attempt to inject hidden formatting or malicious content into the model's output. | DEF CON 31 - Weaponizing Plain Text ANSI Escape Sequences as a Forensic Nightmare - STÖK |
| Answer List Detection | True/False | This scorer checks if the model's response includes any answers from a specified list. It returns a binary score of 0 or 1, where 1 indicates that the response matches an answer from the predefined set. | - |
| Character Consistency | Probability Range (0-1) | This scorer measures the consistency of characters or symbols in the model’s response. It’s useful for testing attacks that involve slight variations in input, such as typographical errors or altered character sequences, to see if the AI can still provide consistent outputs under manipulation. | - |
| Flirtation Detection | Probability Range (0-1) | This scorer assesses the likelihood that the model's response contains flirtatious content, returning a probability score between 0 and 1. A higher score indicates a stronger chance of flirtatious behavior in the output. | - |
| Harm Detection | Probability Range (0-1) | This scorer evaluates the potential for harmful content in the model's response, returning a probability score between 0 and 1. A higher score suggests a greater likelihood of the output containing harmful or inappropriate material. | - |
| Magic Commands Detection | Probability Range (0-1) | This scorer provides a probability score between 0 and 1, indicating the likelihood that the model's response contains magic commands, such as IPython built-in magic commands. Higher scores suggest a stronger presence of magic commands in the output. | IPython Built-in magic commands |
| Profanity Detection | Probability Range (0-1) | This scorer evaluates the probability that the model’s response contains profane language, returning a score between 0 and 1. A higher score indicates a greater likelihood of the output including offensive or vulgar content. | - |
| Refusal Detection | True/False | This scorer identifies whether the model’s response includes a refusal, returning a binary score of 0 or 1. A score of 1 indicates that the response contains a refusal. | - |
| Response Length | Probability Range (0-1) | This scorer evaluates whether the length of the model’s response is within a certain expected range. It can be used to assess if the AI is being "short-circuited" by malicious inputs that cause it to produce unnaturally short or incomplete responses, which may be indicative of a vulnerability. | - |
| Severe Toxicity Detection | Probability Range (0-1) | This scorer assesses the likelihood of severe toxic content in the model's response, returning a probability score between 0 and 1. A higher score indicates a greater chance of the output containing highly harmful or offensive language. | - |
| System Prompt Leakage Detection | Probability Range (0-1) | This scorer evaluates the likelihood that the model's response reveals or leaks information from the system prompt, returning a score between 0 and 1. A higher score indicates a greater risk of prompt leakage in the output. | MITRE ATLAS LLM Meta Prompt Extraction AML.T0056 |
| Text Similarity | Probability Range (0-1) | This scorer measures how similar the model’s response is to a reference or target text. It’s ideal for assessing attacks that involve generating adversarial text inputs, such as slight rephrasing or synonym substitution, to see if the model is tricked into providing a similar (but incorrect) response. | - |
| Toxicity Detection | Probability Range (0-1) | This scorer measures the probability of toxic content in the model's response, returning a score between 0 and 1. A higher score suggests a greater likelihood of harmful, offensive, or inappropriate language in the output. | - |
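To make the True/False scorers more concrete, here is a minimal Python sketch of two of them. It is not the Spyglass implementation: the function names are hypothetical, the ANSI check only looks for CSI sequences (ESC followed by `[`), and the answer-list check assumes a case-insensitive substring match, which may differ from how the real Scorer matches answers.

```python
import re

# Matches ANSI CSI sequences: ESC [ parameter bytes, intermediate bytes,
# then a final byte. Other escape types (for example OSC) are not covered.
ANSI_CSI_PATTERN = re.compile(r"\x1b\[[0-?]*[ -/]*[@-~]")


def ansi_escape_score(response: str) -> int:
    """Return 1 if the response contains an ANSI CSI escape sequence, else 0."""
    return 1 if ANSI_CSI_PATTERN.search(response) else 0


def answer_list_score(response: str, answers: list[str]) -> int:
    """Return 1 if any listed answer appears in the response, else 0.

    Assumption: matching is a case-insensitive substring test.
    """
    lowered = response.casefold()
    return 1 if any(answer.casefold() in lowered for answer in answers) else 0


# A response that tries to color terminal output red, and a plain one.
print(ansi_escape_score("\x1b[31mALERT\x1b[0m"))
print(ansi_escape_score("No formatting here."))

# A response checked against a hypothetical list of expected answers.
print(answer_list_score("The capital is Paris.", ["paris", "lyon"]))
```

Both functions return 0 or 1, matching the True/False Type described in the table.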